Abstract

We introduce PartSTAD, a method designed for the task adaptation of 2D-to-3D segmentation lifting. Recent studies have highlighted the advantages of using 2D segmentation models to achieve high-quality 3D segmentation through few-shot adaptation. However, previous approaches have focused on adapting 2D segmentation models to the domain shift toward rendered images and synthetic text descriptions, rather than optimizing the model specifically for 3D segmentation. Our proposed task adaptation method finetunes a 2D bounding box prediction model with an objective function for 3D segmentation. We introduce weights for 2D bounding boxes to enable adaptive merging, and we learn these weights with a small additional neural network. Additionally, we incorporate SAM, a foreground segmentation model conditioned on a bounding box, to improve the boundaries of 2D segments and, consequently, those of the 3D segmentation. Our experiments on the PartNet-Mobility dataset show significant improvements from our task adaptation approach, with gains of 7.0%p in mIoU for semantic segmentation and 5.2%p in mAP50 for instance segmentation over the SotA few-shot 3D segmentation model.

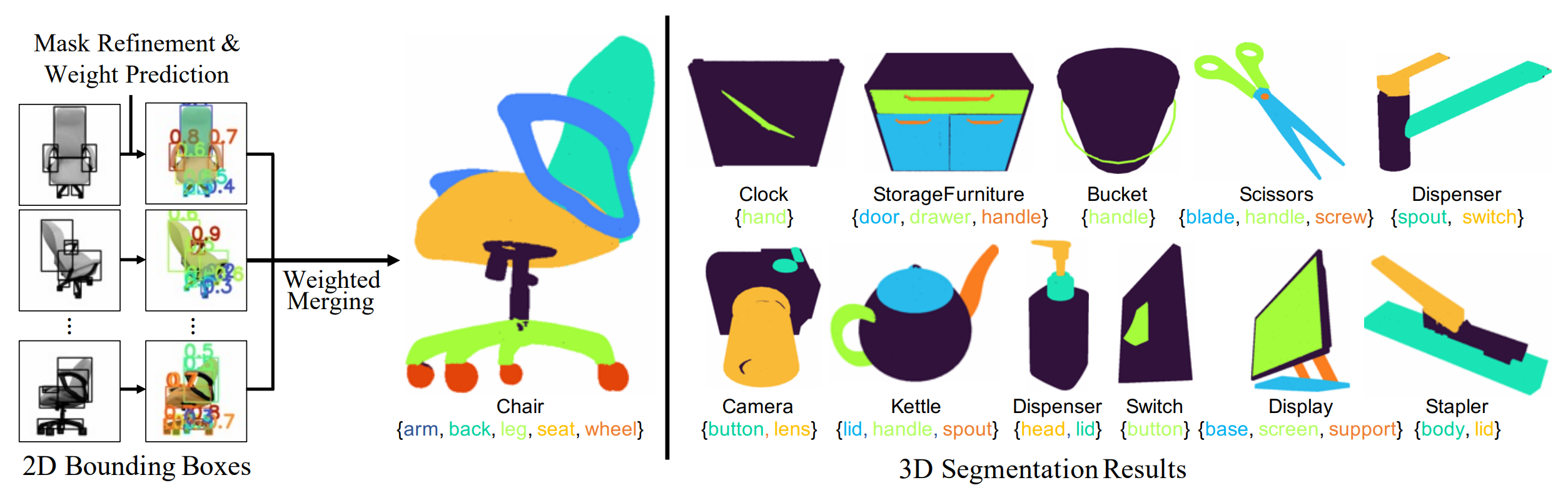

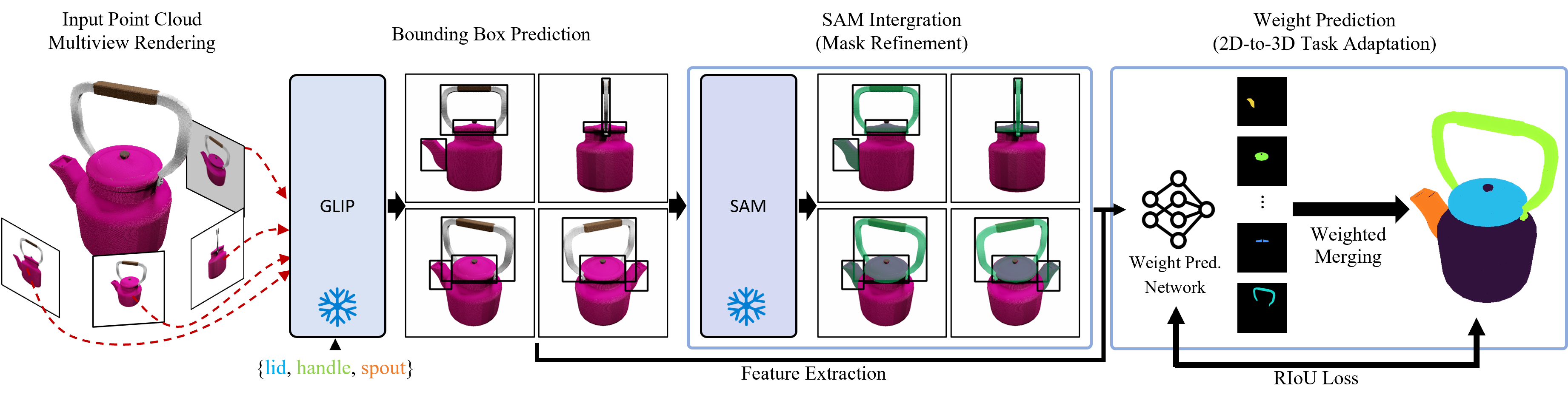

Overall Pipeline

Our approach begins by rendering the input 3D point cloud from multiple viewpoints. We then extract 2D bounding boxes for its parts using GLIP (Bounding Box Prediction); note that we use the finetuned GLIP model from PartSLIP. Next, we convert the bounding boxes into segmentation masks using SAM, which extracts the foreground region within each bounding box (SAM Mask Integration). We then predict a weight for every mask and adaptively combine the weighted masks into a 3D representation (2D-to-3D task adaptation). The final step assigns a segmentation label to each point of the input point cloud. The GLIP and SAM models are frozen; only our novel weight prediction network is trained, separately for each category, in a few-shot setting (8 objects per category).
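The adaptive merging step can be illustrated with a simplified sketch. It assumes each point has already been projected into every rendered view (with -1 marking an invisible point) and that each 2D mask carries a part label and a scalar weight; in PartSTAD the weights come from the weight prediction network, while here they are passed in by hand. The function name `lift_weighted_masks` and the summed-vote aggregation are hypothetical simplifications, not the paper's exact formulation.

```python
import numpy as np


def lift_weighted_masks(pixels, masks, mask_labels, mask_weights, num_parts):
    """Merge weighted 2D part masks into per-point scores and labels (simplified sketch).

    pixels:       int array (V, N, 2) with the (row, col) of each of N points in each
                  of V rendered views; -1 marks a point that is not visible in that view.
    masks:        list of (view_index, bool array of shape (H, W)) pairs.
    mask_labels:  part label in [0, num_parts) for each mask.
    mask_weights: non-negative weight for each mask (hypothetically, the output of a
                  small weight prediction network).
    """
    num_points = pixels.shape[1]
    scores = np.zeros((num_points, num_parts))
    for (view, mask), label, weight in zip(masks, mask_labels, mask_weights):
        rows, cols = pixels[view, :, 0], pixels[view, :, 1]
        visible = rows >= 0
        inside = np.zeros(num_points, dtype=bool)
        inside[visible] = mask[rows[visible], cols[visible]]
        # Every visible point covered by the mask gets the mask's weight as a vote.
        scores[inside, label] += weight
    labels = scores.argmax(axis=1)
    labels[scores.sum(axis=1) == 0] = -1  # points that no mask covers stay unlabeled
    return scores, labels


# One 2x2 view, four points; the last point is not visible.
pixels = np.array([[[0, 0], [0, 1], [1, 1], [-1, -1]]])
mask_a = np.array([[True, True], [False, False]])  # mask for part 0
mask_b = np.array([[False, True], [False, True]])  # mask for part 1
masks = [(0, mask_a), (0, mask_b)]

_, labels = lift_weighted_masks(pixels, masks, [0, 1], [0.9, 0.4], num_parts=2)
print(labels.tolist())  # [0, 0, 1, -1]
_, labels = lift_weighted_masks(pixels, masks, [0, 1], [0.2, 0.4], num_parts=2)
print(labels.tolist())  # [0, 1, 1, -1]
```

Lowering the weight of the first mask flips the point covered by both masks (index 1) to part 1. Learned per-mask weights give the pipeline this kind of control over overlapping or unreliable 2D predictions without touching GLIP itself.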

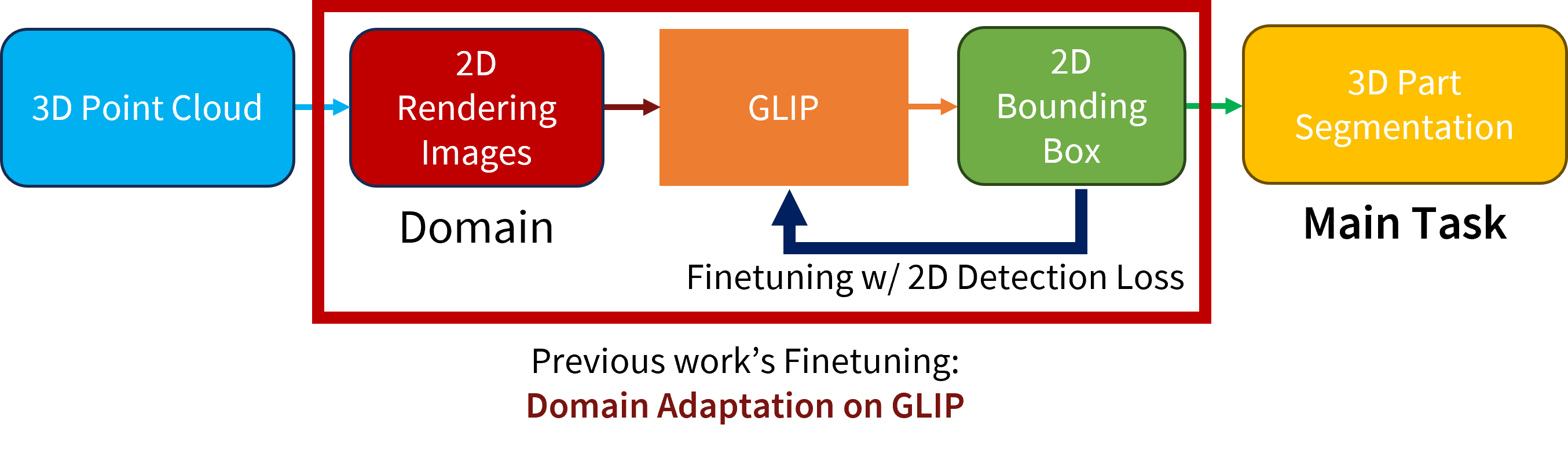

Key Idea: Task Adaptation instead of Domain Adaptation

Although the target task is 3D part segmentation, previous work finetunes the 2D VLM (GLIP) with the VLM's own objective rather than an objective for the 3D task. We therefore propose a task adaptation approach that adapts the 2D model directly to the 3D task, instead of conventional domain adaptation, which fails to fully exploit the 3D segmentation results.

However, because our new objective function (3D mRIoU loss) is not differentiable with respect to GLIP's output, we propose an alternative approach: Weight Prediction & Score Reformulation. (Please refer to the main paper for details.)
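To see why moving the learnable quantity from GLIP's boxes to per-mask weights helps, consider a minimal sketch. It assumes a common soft-IoU relaxation, sum(p·g) / sum(p + g − p·g), averaged over parts; the main paper's exact mRIoU definition may differ, and the sketch does not attempt to reproduce its Score Reformulation. The coverage matrix, the temperature-scaled softmax that turns weighted votes into per-point probabilities, and the helper `loss_for_weights` are all hypothetical choices made for illustration.

```python
import numpy as np


def softmax(x, temperature=1.0):
    """Row-wise softmax with a temperature; smaller temperatures approach argmax."""
    z = x / temperature
    z = z - z.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def mean_relaxed_iou_loss(probs, gt_onehot, eps=1e-8):
    """1 - mean over parts of a soft IoU between (N, K) probabilities and one-hot labels."""
    intersection = (probs * gt_onehot).sum(axis=0)
    union = (probs + gt_onehot - probs * gt_onehot).sum(axis=0)
    return 1.0 - (intersection / (union + eps)).mean()


def loss_for_weights(weights, coverage, mask_onehot, gt_onehot, temperature=0.1):
    """Loss as a smooth function of per-mask weights (hypothetical formulation).

    coverage:    (N, M) 0/1 matrix, 1 if point n falls inside mask m in its view.
    mask_onehot: (M, K) one-hot part label of each mask.
    """
    scores = coverage @ (weights[:, None] * mask_onehot)  # (N, K) weighted votes
    return mean_relaxed_iou_loss(softmax(scores, temperature), gt_onehot)


coverage = np.array([[1, 0], [1, 1], [0, 1]], dtype=float)   # mask 0: points 0-1, mask 1: points 1-2
mask_onehot = np.array([[1, 0], [0, 1]], dtype=float)        # mask 0 -> part 0, mask 1 -> part 1
gt_onehot = np.array([[1, 0], [0, 1], [0, 1]], dtype=float)  # ground truth: point 1 is part 1

loss_bad = loss_for_weights(np.array([0.9, 0.4]), coverage, mask_onehot, gt_onehot)
loss_good = loss_for_weights(np.array([0.2, 0.4]), coverage, mask_onehot, gt_onehot)
print(loss_bad > loss_good)  # True
```

Every step from the weights to the loss is a matrix product, a softmax, or a sum, so an autodiff framework could backpropagate this loss into the weights and hence into a network that predicts them. The frozen GLIP boxes enter only through the fixed coverage matrix.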

Additionally, we improve performance by using the bounding boxes predicted by GLIP as box prompts for SAM, which refines each box into a foreground mask.
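The benefit of mask refinement can be shown with a toy example. Assigning every pixel inside a bounding box to a part also captures background, whereas a foreground mask keeps only the part itself. In the sketch below, the mask is hand-made as a stand-in for SAM's output. In practice it would come from SAM with the GLIP box as its box prompt, for example via `SamPredictor.predict(box=...)` in the `segment_anything` package, which expects XYXY pixel coordinates rather than the hypothetical (row0, col0, row1, col1) order used here.

```python
import numpy as np


def points_in_box(pixels, box):
    """Points whose (row, col) pixel lies inside an inclusive box (row0, col0, row1, col1)."""
    rows, cols = pixels[:, 0], pixels[:, 1]
    row0, col0, row1, col1 = box
    return (rows >= row0) & (rows <= row1) & (cols >= col0) & (cols <= col1)


def points_in_mask(pixels, mask):
    """Points whose pixel is foreground in a binary (H, W) mask."""
    return mask[pixels[:, 0], pixels[:, 1]]


# A 4x4 view: the part is an L-shape, and its bounding box also contains background.
mask = np.zeros((4, 4), dtype=bool)
mask[:3, 0] = True   # vertical stroke of the L
mask[2, :3] = True   # horizontal stroke of the L
box = (0, 0, 2, 2)   # tight box around the L (hand-made, not a GLIP prediction)
pixels = np.array([[0, 0], [1, 1], [2, 0], [3, 3]])  # projected pixels of four points

print(points_in_box(pixels, box).tolist())    # [True, True, True, False]
print(points_in_mask(pixels, mask).tolist())  # [True, False, True, False]
```

The point at pixel (1, 1) lies inside the box but off the part, so only the mask-based assignment excludes it. This is the kind of boundary error that mask refinement removes before the 2D results are lifted to 3D.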